18. Network Structure

How can you decide on a network structure?

At this point, deciding on a network structure (how many layers to create, when to include dropout layers, and so on) may seem a bit like guessing, but there is a rationale behind defining a good model.

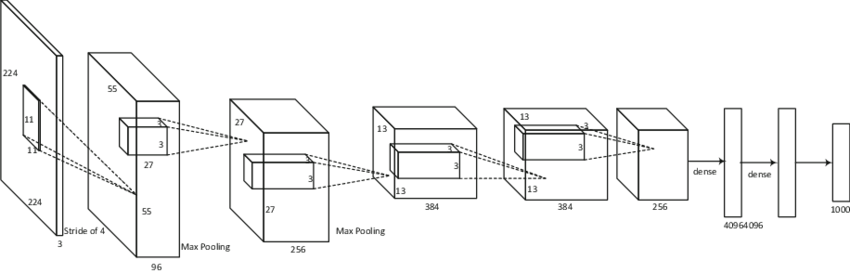

I think a lot of people (myself included) build up an intuition about how to structure a network from existing models. Take AlexNet as an example; the link below gives a nice, concise walkthrough of its structure and the reasoning behind it.

AlexNet structure.

Preventing Overfitting

Often we see batch norm applied after early layers in the network, say after a set of conv/pool/activation steps, since this normalization step is fairly quick and reduces how much the distribution of hidden-layer values shifts around during training. Dropout layers often come near the end of the network; placing them between fully-connected layers, for example, can prevent any single node in those layers from dominating.
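As a rough illustration of that placement, here is a minimal sketch assuming PyTorch and 28x28 grayscale inputs (clothing-image sized). The class name `SmallCNN` and all layer widths are hypothetical choices for illustration, not a tuned or recommended architecture: batch norm follows each early conv/activation/pool block, and dropout sits between the two fully-connected layers.

```python
import torch
import torch.nn as nn


class SmallCNN(nn.Module):
    """Hypothetical example network for 1x28x28 inputs; sizes are illustrative."""

    def __init__(self, num_classes: int = 10, p_drop: float = 0.5):
        super().__init__()
        self.features = nn.Sequential(
            # Block 1: conv -> activation -> pool, then batch norm
            nn.Conv2d(1, 16, kernel_size=3, padding=1),   # -> 16 x 28 x 28
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 16 x 14 x 14
            nn.BatchNorm2d(16),
            # Block 2: same pattern with more channels
            nn.Conv2d(16, 32, kernel_size=3, padding=1),  # -> 32 x 14 x 14
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 32 x 7 x 7
            nn.BatchNorm2d(32),
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(32 * 7 * 7, 128),
            nn.ReLU(),
            nn.Dropout(p_drop),  # dropout between the fully-connected layers
            nn.Linear(128, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


if __name__ == "__main__":
    model = SmallCNN()
    scores = model(torch.randn(4, 1, 28, 28))  # a batch of 4 random "images"
    print(scores.shape)  # one score per class for each image
```

Calling `model.eval()` before evaluating switches dropout off and makes batch norm use its running statistics instead of per-batch ones.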

Convolutional and Pooling Layers

As for conv/pool structure, I would again recommend looking at existing architectures, since many people have already done the work of throwing things together and seeing what works. In general, more layers let the network detect more complex structures, because each layer builds on the features found by the layer before it, but you should always consider the size and complexity of your training data (many layers may not be necessary for a simple task).
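One way to see why stacking layers lets a network "see" larger structures is to track each layer's output size and receptive field (the patch of input pixels that a single output unit depends on). The pure-Python sketch below uses the standard formulas out = floor((in + 2p - k) / s) + 1 and r_out = r_in + (k - 1) * j, where j is the cumulative stride so far. The `Layer` and `trace` names are hypothetical helpers written for this illustration, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical description of a square conv or pool layer."""
    name: str
    kernel: int
    stride: int = 1
    padding: int = 0


def trace(input_size, layers):
    """Return (name, output_size, receptive_field) for each layer in order."""
    size, rf, jump = input_size, 1, 1
    rows = []
    for layer in layers:
        # Spatial size of this layer's output feature map
        size = (size + 2 * layer.padding - layer.kernel) // layer.stride + 1
        # Each kernel step widens the receptive field by the current jump
        rf = rf + (layer.kernel - 1) * jump
        # Distance (in input pixels) between neighbouring output units
        jump = jump * layer.stride
        rows.append((layer.name, size, rf))
    return rows


if __name__ == "__main__":
    stack = [
        Layer("conv1", kernel=3, padding=1),
        Layer("pool1", kernel=2, stride=2),
        Layer("conv2", kernel=3, padding=1),
        Layer("pool2", kernel=2, stride=2),
    ]
    for name, size, rf in trace(28, stack):
        print(f"{name}: {size}x{size} output, {rf}x{rf} receptive field")
```

For a 28x28 input, two 3x3-conv/2x2-pool blocks end in a 7x7 map whose units each depend on a 10x10 input patch; a third block would widen that further. Larger kernels grow the receptive field faster per layer, which is one reason larger images can call for larger kernels.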

As You Learn

When you are first learning about CNNs for classification or any other task, you can improve your intuition about model design by tackling a simple task (such as clothing classification) and quickly trying out new approaches. You are encouraged to:

- Change the number of convolutional layers and see what happens

- Increase the size of convolutional kernels for larger images

- Change your loss and optimization functions to see how your model responds (especially change hyperparameters such as the learning rate and see what happens; you will learn more about hyperparameters in the second module of this course)

- Add layers to prevent overfitting, such as dropout or batch norm layers

- Change the batch_size of your data loader to see how larger batch sizes can affect your training

Always watch how much and how quickly your model loss decreases, and learn from improvements as well as mistakes!
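To make those experiments easy to compare, it helps to record the average loss for every epoch. The sketch below assumes PyTorch, plain SGD and cross-entropy loss; the `train` function and its defaults are hypothetical, and the random tensors in the demo stand in for a real dataset so the code runs without downloading anything (so the loss values themselves mean little).

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train(model, dataset, batch_size=32, lr=0.01, epochs=5, seed=0):
    """Train with SGD and return the average training loss for each epoch."""
    torch.manual_seed(seed)  # makes the shuffling order repeatable
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=True)
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    history = []
    model.train()
    for _ in range(epochs):
        running = 0.0
        for images, labels in loader:
            optimizer.zero_grad()
            loss = criterion(model(images), labels)
            loss.backward()
            optimizer.step()
            # Weight by batch size so a smaller final batch is averaged fairly
            running += loss.item() * images.size(0)
        history.append(running / len(dataset))
    return history


if __name__ == "__main__":
    torch.manual_seed(0)
    # Random stand-in data: 512 "images" of size 1x28x28 with 10 classes
    data = TensorDataset(torch.randn(512, 1, 28, 28), torch.randint(0, 10, (512,)))
    for bs in (16, 128):
        model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))
        losses = train(model, data, batch_size=bs)
        print(f"batch_size={bs}: " + ", ".join(f"{l:.3f}" for l in losses))
```

With the same number of epochs, a smaller `batch_size` means more optimizer steps per epoch, so comparing the per-epoch histories side by side shows how batch size (or learning rate, or a model change) affects how quickly the loss falls.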